Now I calibrate the monitor to 6500K and 120 cd/m2 (still with the calibration device in the center of the screen). In the middle of the screen, I've measured a color temperature of 5800K, a brightness of 122 cd/m2, and delta E deviations in a specific color space for many different colors (and values). To explain this, I will simply give you an example: BUT! Here’s the next “problem” ( Part 4) and why I do not recommend in general to buy such a device, as long as you don't need color accuracy for print, post-production or similar.Īs already mentioned, gaming monitors are not developed to offer a “perfect” brightness and color temperature homogeneity. The only way to calibrate for 6500K, gamma 2.2 and 120cd/m2 is when you purchase a hardware device to measure your individual unit.

#LCD MONITOR GAMMA TEST SOFTWARE#

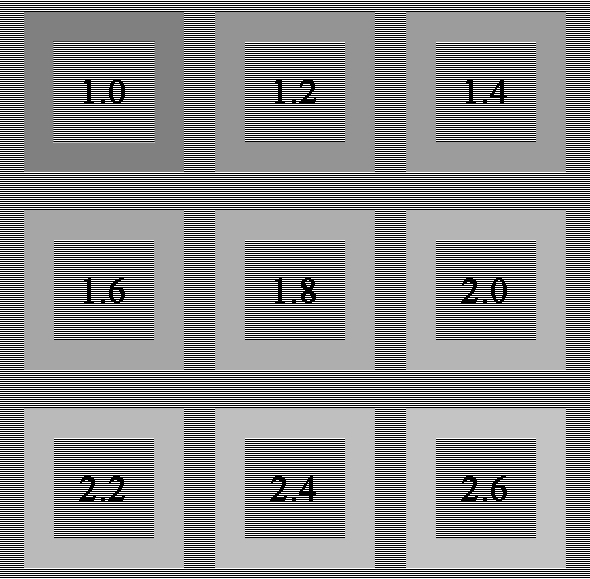

brightness as well as small deviations within gamma settings so that you will not come to the same result as I or other reviewers do. This information will give you the logical result, that your unit will have a different color temperature and max. “Each individual unit will differ within the native color temperature (RGB 100/100/100) as well as in the max brightness” Now you do some research, see some recommendations on the internet, buy the monitor I've tested, and finally, you ask me for optimal settings. Now I'm calibrating a monitor to 6500K (color temperature / white point) and 120 cd/m2 (brightness) with following settings: brightness of 382 cd/m2 (Brightness settings 100 of 100). My tested unit has a native color temperature of 6900K (RGB settings 100/100/100) and a max.

I want to tell you about a mistake many people make, a misunderstanding that occurs due to a lack of information on the internet. It will not be the answer you’re looking for yet the answer you need. In this article, I would like to talk about optimal monitor settings, as I am literally overwhelmed by this specific question and it takes a lot of time to answer the same question again and again.
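To put figures like "6500K", "gamma 2.2", "120 cd/m²" and "delta E" from the calibration discussion above into context, here is a minimal Python sketch of the kind of arithmetic behind them. It is not the method of any particular calibration device or software, and the function names are hypothetical. Assumptions: color temperature is estimated from CIE 1931 xy chromaticity with McCamy's cubic approximation, the gamma target is a pure power law with zero black level, and delta E uses the simple CIE76 formula (many calibration tools report CIEDE2000 instead).

```python
import math

# Hypothetical helper names; a sketch, not any calibrator's actual algorithm.


def cct_mccamy(x: float, y: float) -> float:
    """Approximate correlated color temperature (K) from CIE 1931 xy
    chromaticity using McCamy's cubic approximation (assumed method;
    only meaningful for white points near the blackbody locus)."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


def target_luminance(level: float, white_cd_m2: float = 120.0,
                     gamma: float = 2.2) -> float:
    """Luminance (cd/m²) an ideal power-law display should produce for a
    normalized input level in [0, 1]. Assumes zero black level."""
    if not 0.0 <= level <= 1.0:
        raise ValueError("level must be in [0, 1]")
    return white_cd_m2 * level ** gamma


def delta_e_76(lab1, lab2) -> float:
    """CIE76 color difference: Euclidean distance between two CIELAB
    (L*, a*, b*) triples. Simpler than CIEDE2000, which many tools use."""
    return math.dist(lab1, lab2)


if __name__ == "__main__":
    # D65, the usual "6500K" white point, is at roughly x=0.3127, y=0.3290.
    print(round(cct_mccamy(0.3127, 0.3290)))  # roughly 6500
    # Expected luminance of a 50% gray on a 120 cd/m², gamma 2.2 target.
    print(round(target_luminance(0.5), 1))  # 26.1
    # Two colors that differ by 3 in L* and 4 in a*.
    print(delta_e_76((50.0, 0.0, 0.0), (53.0, 4.0, 0.0)))  # 5.0
```

Because every unit has its own native white point and maximum brightness, the xy and luminance values a colorimeter feeds into calculations like these differ from panel to panel, which is why copying another reviewer's settings will not reproduce the same result.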
